Uploading and Processing Footage

This instructional video outlines how users of VisualCortex's SaaS Platform (platform.visualcortex.com) can independently upload and process footage.

Prerequisites: Create an account on platform.visualcortex.com

This 'How To' video is designed for users who have already created an account on the public-facing self-service version of our Video Intelligence Platform, https://platform.visualcortex.com/

If you haven't created an account yet, visit the URL above and click the 'Sign Up' link.

To create your VisualCortex account, simply:

- Enter your company name

- Agree to the VisualCortex Terms of Service and Privacy Policy

- Sync with your Google or Microsoft account

Sign-up troubleshooting tips:

- Your Company Name is already taken: Contact the creator of that Organization on platform.visualcortex.com so that they can add you to it. Alternatively, email support@visualcortex.com with the Company Name and we'll contact the creator on your behalf.

- You don't want to sync your VisualCortex account with a Google or Microsoft account: Email support@visualcortex.com with the Company Name and Business Email you'd like to use. We'll create an account for you and send you instructions for activating it.

Uploading footage to platform.visualcortex.com

Click the '+ New' button in the top right of your home page and select 'New Folder'. Give the folder a name that relates to the type of footage you'll be adding to it.

Once inside the new folder, click the '+ New' button again and select 'New Virtual Camera'. In VisualCortex, a 'Virtual Camera' is the name given to footage that relates to a particular source and field-of-view.

Give your Virtual Camera a practical name that clearly describes the footage. Select the Processing Location closest to you for faster processing.

There are four steps to getting the most from your new Virtual Camera and generating video analytics:

1. Add footage

2. Select the Machine Learning Model you want to apply to your chosen footage

3. Choose and position an 'Event Definition' within the footage's field-of-view

4. Create charts and dashboards that help you see and act on generated video analytics

Let's walk through precisely how this is done.

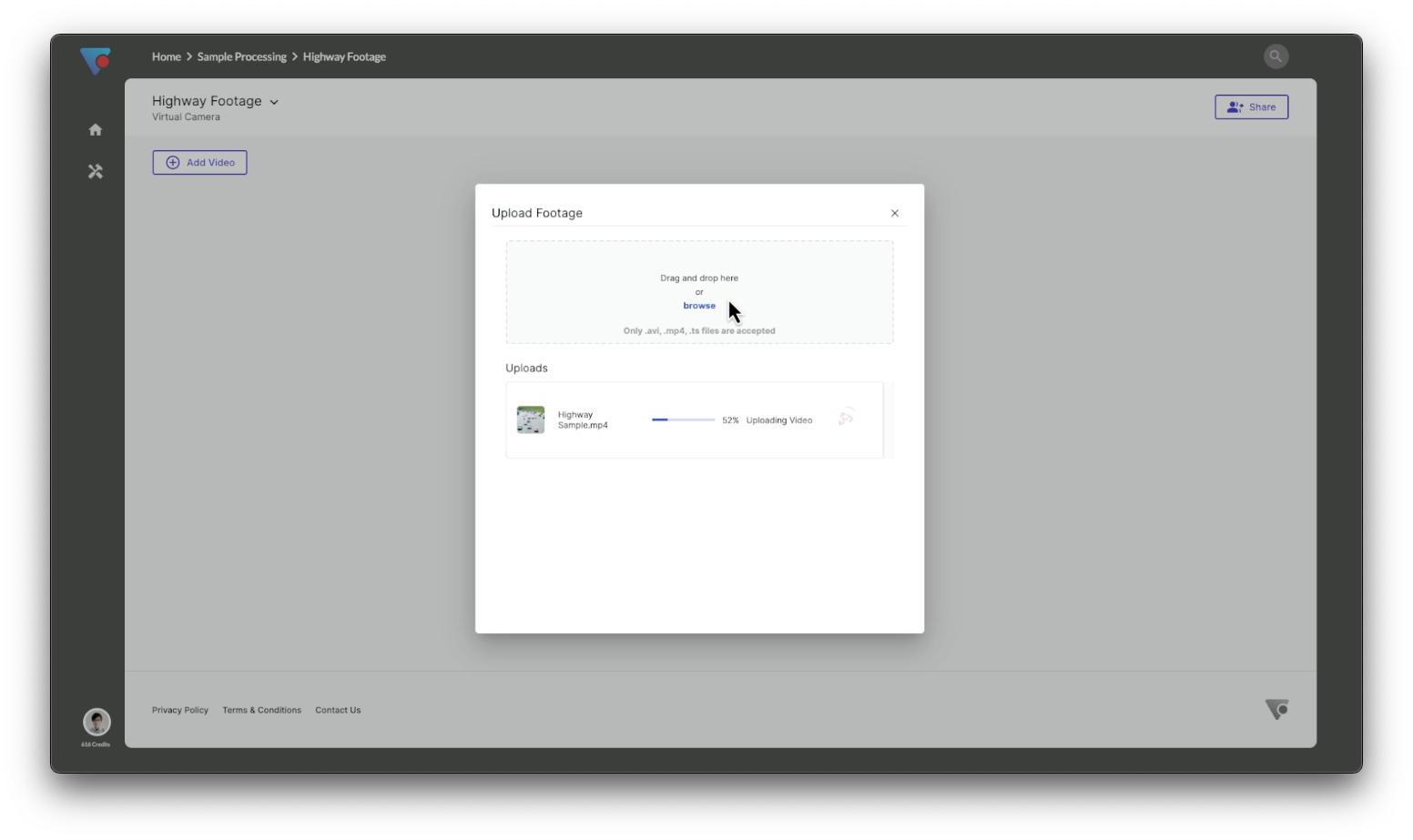

Adding footage to your Virtual Camera

Once you've chosen the Name and Processing Location of your Virtual Camera, you'll be prompted to upload footage. You can do this by clicking the 'video' button in the center of the screen, or by using the 'Add Video' button in the top left of the Virtual Camera.

Drag and drop your selected footage onto the pop-up lightbox (supported file types include .avi, .ts, .mp4, .webm, .mkv and .wmv).

A progress bar shows the upload's progress in real time.
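If you're preparing a batch of files locally, a quick pre-check can flag anything with an unsupported extension before you drag it onto the lightbox. The sketch below is a hypothetical helper, not part of VisualCortex: `is_supported_video` and `partition_uploads` are illustrative names. It only compares file extensions (case-insensitively) against the list above and doesn't verify that a file actually contains playable video.

```python
from pathlib import Path
from typing import Iterable, List, Tuple

# Extensions listed in this guide as supported for upload.
SUPPORTED_EXTENSIONS = {".avi", ".ts", ".mp4", ".webm", ".mkv", ".wmv"}


def is_supported_video(filename: str) -> bool:
    """True if the file's final extension is in the supported list (case-insensitive)."""
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


def partition_uploads(filenames: Iterable[str]) -> Tuple[List[str], List[str]]:
    """Split candidate files into (supported, unsupported) lists, preserving order."""
    names = list(filenames)
    supported = [f for f in names if is_supported_video(f)]
    unsupported = [f for f in names if not is_supported_video(f)]
    return supported, unsupported
```

For example, `partition_uploads(["lobby.MP4", "notes.txt"])` returns `(["lobby.MP4"], ["notes.txt"])`.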

Selecting your Machine Learning Model

Once your footage has uploaded, add a Model to apply to it in order to generate your desired video analytics.

Next, choose a Machine Learning (ML) Model that contains the objects you want to track (e.g., if you're looking to detect and analyze foot traffic, you'll likely want the Pedestrian Model).

After choosing your ML Model, select the size of Model you'd like to use. Larger ML Models cost more credits and take longer to process, but can detect objects more accurately and at greater distances.

Selecting and configuring Event Definitions

Once you've saved your desired presets, you'll return to the Virtual Camera home page, where it's time to select your Event Definition. There are two main types available: Directional Counts and Zones.

To configure Directional Counts as an Event type, position the virtual line within the frame so that the objects you intend to count will cross it. Where possible, reduce the angle at which objects cross the line, and leave sufficient space before and after the line so that objects can be accurately detected.

As well as dragging the line across the field-of-view, you can add and remove points (line sections) to create the angles you need, and flip the entry/exit direction.
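This guide doesn't describe how VisualCortex decides that an object has crossed the line. The sketch below shows one common geometric approach, a cross-product side test applied to consecutive tracked positions of an object, assuming each object is reduced to a track of (x, y) points. The function names and the choice of which side counts as "entry" are hypothetical.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def side(a: Point, b: Point, p: Point) -> float:
    """2D cross product of (b - a) and (p - a): > 0 if p is left of a->b, < 0 if right, 0 if on it."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def crossing_direction(a: Point, b: Point, p: Point, q: Point) -> int:
    """+1 if the move p->q crosses segment a-b from its right side to its left,
    -1 if from left to right, 0 if it doesn't strictly cross the segment."""
    s1, s2 = side(a, b, p), side(a, b, q)
    if s1 * s2 >= 0:  # both positions on the same side, or one exactly on the line
        return 0
    t1, t2 = side(p, q, a), side(p, q, b)
    if t1 * t2 >= 0:  # the move passes beside the segment, not through it
        return 0
    return 1 if s2 > 0 else -1


def count_crossings(track: List[Point], line: List[Point], flip: bool = False) -> Dict[str, int]:
    """Count entry/exit crossings of one object's track against a multi-point line.
    Which side counts as 'entry' is arbitrary here; flip=True swaps it.
    Moves passing exactly through a line vertex are not counted in this sketch."""
    counts = {"entry": 0, "exit": 0}
    for p, q in zip(track, track[1:]):
        for a, b in zip(line, line[1:]):
            d = crossing_direction(a, b, p, q)
            if flip:
                d = -d
            if d > 0:
                counts["entry"] += 1
            elif d < 0:
                counts["exit"] += 1
    return counts
```

Calling `count_crossings([(5, -1), (5, 1)], [(0, 0), (10, 0)])` returns `{"entry": 1, "exit": 0}`, and `flip=True` swaps the two. Because a crossing only registers when the object is observed on both sides of the line, tracks that begin or end right at the line are missed, which is one reason to leave space before and after it.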

During set-up, the control panel on the left-hand side lets you zoom and reset the canvas view, helping you position your Event Definitions optimally within the video frame's field-of-view.

Below the Event Definition Canvas, three further fields help you label the detections you're generating.

The first is an Event Definition Name, which should clearly and simply describe the type of footage and the objects or actions being detected and tracked. This will make reporting and visualizing the generated metadata quick and easy.

Next, name your entry and exit points to make the detections easier to interpret. When creating dashboards (see the Dashboards 'How To' guide for details), naming the entry and exit points of your directional counts clearly and consistently lets you create meaningful aggregations of combined entry and exit data across multiple zones.
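To see why consistent naming matters, the sketch below sums directional counts across several Event Definitions by their entry/exit label. The record layout (`event_definition`, `direction`, `count`) and the `total_by_direction` helper are hypothetical stand-ins, not VisualCortex's export format or API.

```python
from collections import Counter
from typing import Dict, Iterable, Mapping


def total_by_direction(records: Iterable[Mapping]) -> Dict[str, int]:
    """Sum directional counts across Event Definitions, keyed by the entry/exit label."""
    totals: Counter = Counter()
    for r in records:
        totals[r["direction"]] += r["count"]
    return dict(totals)


# Hypothetical counts from two doorways that use the same labels.
records = [
    {"event_definition": "North Door", "direction": "Entry", "count": 12},
    {"event_definition": "South Door", "direction": "Entry", "count": 8},
    {"event_definition": "North Door", "direction": "Exit", "count": 10},
]
print(total_by_direction(records))  # {'Entry': 20, 'Exit': 10}
```

If the South Door's entry point were labelled "In" instead, the same data would come back as `{'Entry': 12, 'In': 8, 'Exit': 10}`, splitting what should be a single total.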

The other main type of Event Definition for counting-based video analytics is the Zone.

Drag the points at each corner of your Zone to position it carefully over the area in which you want detections to be captured. Use the same Control Panel at the bottom of the canvas to add, remove or reset points to customize your Zone.
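Conceptually, a Zone is a polygon defined by its corner points, and a detection is captured when it falls inside that polygon. The sketch below uses the standard ray-casting point-in-polygon test to illustrate the idea. It assumes each detection is reduced to a single reference point (for example, the centre of its bounding box); which point VisualCortex actually uses isn't described in this guide, and the function names are hypothetical.

```python
from typing import Iterable, List, Tuple

Point = Tuple[float, float]


def point_in_zone(p: Point, zone: List[Point]) -> bool:
    """Ray-casting test: True if p lies inside the polygon given by the zone's corner points."""
    x, y = p
    inside = False
    n = len(zone)
    for i in range(n):
        x1, y1 = zone[i]
        x2, y2 = zone[(i + 1) % n]  # wrap around to close the polygon
        # Only edges that straddle the horizontal line through p can be crossed by the ray.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:  # the ray travels to the right of p
                inside = not inside
    return inside


def count_in_zone(detections: Iterable[Point], zone: List[Point]) -> int:
    """Count detection reference points that fall inside the zone."""
    return sum(1 for d in detections if point_in_zone(d, zone))
```

Adding or removing corner points simply changes the `zone` list, so the same test works for any simple polygon shape.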

Processing footage

After uploading footage, selecting your Model and setting your Event Definitions, it's time to process your video. To do so, head back to the Virtual Camera home page, and click on the blue 'Process Video' button at the top of the screen.

First, select the specific video you wish to process. Over time you may upload numerous videos to a single Virtual Camera, so this step becomes more important as you go.

Next, select the ML Model you want to use. Again, over time you may apply several Models to footage within any given Virtual Camera, so paying close attention at Step Two will also become increasingly important.

Step Three is the Checkout phase. Here, you'll see an estimate of the number of credits your processing request will consume, as well as the total number of credits you have available. Note that Step Two also shows the credits consumed per minute of processed video for each available Model.

Once checked out, processing will begin immediately. A progress bar indicates how much time is left.
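The Checkout estimate is calculated for you, but the underlying arithmetic is easy to sanity-check if you assume credits scale linearly with video length: the per-minute rate shown at Step Two multiplied by the video's duration in minutes. The sketch below encodes that assumption with hypothetical helper names; the platform may round or price differently, so treat the Checkout figure as authoritative.

```python
def estimate_credits(duration_seconds: float, credits_per_minute: float) -> float:
    """Assumes linear pricing: per-minute rate (as shown at Step Two) x video length in minutes."""
    return credits_per_minute * (duration_seconds / 60)


def can_afford(duration_seconds: float, credits_per_minute: float, credits_available: float) -> bool:
    """True if the estimated cost fits within the credits you have available."""
    return estimate_credits(duration_seconds, credits_per_minute) <= credits_available
```

For a 90-minute video at a hypothetical rate of 2 credits per minute, `estimate_credits(90 * 60, 2)` gives `180.0`, so `can_afford(90 * 60, 2, 100)` is `False`.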

Exploring Results

Next, you can explore the results of your processed footage. To do so, navigate to the 'Explore Results' button on your Virtual Camera homepage.

This button contains two important functions: 'Visualize video' and 'Explore charts'. Visualizing your video lets you play back the post-processed footage with an overlay of all detections as they occur.

After clicking 'Visualize video', another pop-up lists a number of options. Here, you can reconfirm the footage, Model and Event Definition you wish the VisualCortex Visualizer to display during playback.

Next, you can apply filters to the types, or 'classes', of detections you want to visualize. For example, the VisualCortex Vehicle Model contains many different types of vehicles: cars, buses, trucks, etc. When visualizing, graphing or reporting detections, you can choose to include or exclude certain types.

The final option before rendering your video is to select the specific duration of video you wish to display in the VisualCortex Visualizer. You can either leave it at the default setting, which renders and visualizes detections for the full video, or select a specific segment.
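The class filter and segment selection above amount to two simple filters applied to the list of detections. The sketch below illustrates this with a hypothetical `Detection` record (a timestamp and a class name); it isn't VisualCortex's data model, and `select_detections` is an illustrative name. Leaving `end` as `None` mirrors the default full-video setting.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set


@dataclass
class Detection:
    """Hypothetical detection record: when it occurred and which class it belongs to."""
    t: float   # seconds from the start of the video
    cls: str   # detection class, e.g. "car", "bus" or "truck"


def select_detections(
    detections: Iterable[Detection],
    include: Optional[Set[str]] = None,
    exclude: Optional[Set[str]] = None,
    start: float = 0.0,
    end: Optional[float] = None,
) -> List[Detection]:
    """Keep detections whose class passes the include/exclude filters and
    whose timestamp falls in [start, end). end=None means 'to the end of the video'."""
    selected = []
    for d in detections:
        if include is not None and d.cls not in include:
            continue
        if exclude is not None and d.cls in exclude:
            continue
        if d.t < start or (end is not None and d.t >= end):
            continue
        selected.append(d)
    return selected
```

For example, passing `include={"car", "truck"}` and `end=15` keeps only cars and trucks detected in the first 15 seconds.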

Once the video has finished rendering, a thumbnail will appear in the Visualizer pop-up window. Click that thumbnail to play the rendered video.

The rendered video produced by the VisualCortex Visualizer includes both Bounding Boxes around detected objects and metrics from the configured Event Definition (right-hand side).

The second function for displaying and assessing results is VisualCortex's in-platform charts. To build, analyze and share charts, navigate back to the Virtual Camera homepage, click the 'Explore results' button, then select the 'Explore charts' option.

Select the chart type you want to use from the options on the right-hand side. Group the displayed data by Class or Direction using the drop-down filter on the right-hand side.

And because video analytics are performed across the footage's entire field-of-view, you can even reposition the Event Definition to return new counts on the fly for different regions within that field-of-view.
